Changelog v1.0.5.2-release+1.21.1

This minor update brings AI Player to Minecraft 1.21.1 and adds support for custom OpenAI-compatible API providers.

Custom OpenAI-Compatible Provider Support

This feature allows you to use alternative AI providers that are compatible with the OpenAI API standard, such as OpenRouter, TogetherAI, Perplexity, and others.

How to Use

1. Enable Custom Provider Mode

Set the system property (JVM argument) when launching the game:

-Daiplayer.llmMode=custom

2. Configure API Settings

- First, delete the existing settings.json5 in the config folder (save any API keys elsewhere in the meantime).

- Open the in-game configuration screen (`/configMan`) and then click the API Keys button.

- Set the following fields:

  - Custom API URL: The base URL of your provider (e.g., `https://openrouter.ai/api/v1`)

  - Custom API Key: Your API key for the provider

- Hit save. If you don't see the list of models immediately, hit the "Refresh Models" button once or twice.

- If you still don't see the list of models, close the config manager and type `/configMan` again; you should then see the list of available models.

- ollama is still required to be open in the background because of the embedding model being used, but I will separate this entirely in the next mini patch by adding an embedding API endpoint from the providers, and also by upgrading to the `embeddinggemma` model from `nomic-embed-text`.

3. Select a Model

The system will automatically fetch available models from your provider's /models endpoint and display them in the model selection interface.

Supported Providers

Any provider that implements the OpenAI API standard should work. Some examples:

- OpenRouter: `https://openrouter.ai/api/v1`

- TogetherAI: `https://api.together.xyz/v1`

- Perplexity: `https://api.perplexity.ai/`

- Groq: `https://api.groq.com/openai/v1`

- Local LM Studio: `http://localhost:1234/v1`

API Compatibility

The custom provider implementation uses the following OpenAI API endpoints:

- `GET /models` - For fetching available models

- `POST /chat/completions` - For sending chat completion requests

Your provider must support these endpoints with the same request/response format as OpenAI's API.
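To give a rough idea of what these two calls look like, here is a minimal Python sketch. It is not the mod's code (the mod is not written in Python), and the helper names `build_models_request`, `build_chat_request` and `parse_model_ids` are made up for this example. It assumes the usual OpenAI conventions: a Bearer token in the `Authorization` header, and a `/models` response that carries a `data` array of objects with an `id` field. The sketch only builds the requests and parses a response body; nothing is sent over the network.

```python
import json
import urllib.request
from typing import List


def build_models_request(base_url: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) the GET /models request."""
    url = base_url.rstrip("/") + "/models"
    headers = {"Authorization": f"Bearer {api_key}"}
    return urllib.request.Request(url, headers=headers, method="GET")


def build_chat_request(base_url: str, api_key: str, model: str,
                       user_message: str) -> urllib.request.Request:
    """Build (but do not send) the POST /chat/completions request."""
    url = base_url.rstrip("/") + "/chat/completions"
    body = json.dumps({
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
    }).encode("utf-8")
    headers = {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
    }
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


def parse_model_ids(response_body: str) -> List[str]:
    """Extract model ids from an OpenAI-style /models response body."""
    payload = json.loads(response_body)
    return [entry["id"] for entry in payload.get("data", [])]
```

To actually send one of these requests you could pass it to `urllib.request.urlopen`. If a provider rejects either request or returns a differently shaped body, that is a good hint it is not fully OpenAI-compatible.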

Troubleshooting

- "Custom provider selected but no API URL configured": Make sure you've set the Custom API URL field

- "Custom API key not set in config!": Make sure you've set the Custom API Key field

- Empty model list: Check that your API key is valid and the URL is correct

- Connection errors: Verify that the provider URL is accessible and supports the OpenAI API format

Changelog v1.0.5.1-release+1.20.6-bugfix-2

- Fixed a lot of bugs that went unnoticed in my first round of testing.

Changelog

- Fixed bug where JVM arguments were not being read.

- Removed owo-lib. AI-Player now uses an in-house config system.

- Fixed API keys saving issues.

- Added a new Launcher Detection System. The Modrinth launcher's own path variable system was conflicting with the mod, so the QTable was not being loaded. Supports: Vanilla MC Launcher, Modrinth App, MultiMC, Prism Launcher, CurseForge launcher, ATLauncher, and even unknown launchers (which would otherwise be unsupported), assuming they follow the vanilla MC launcher's path scheme.

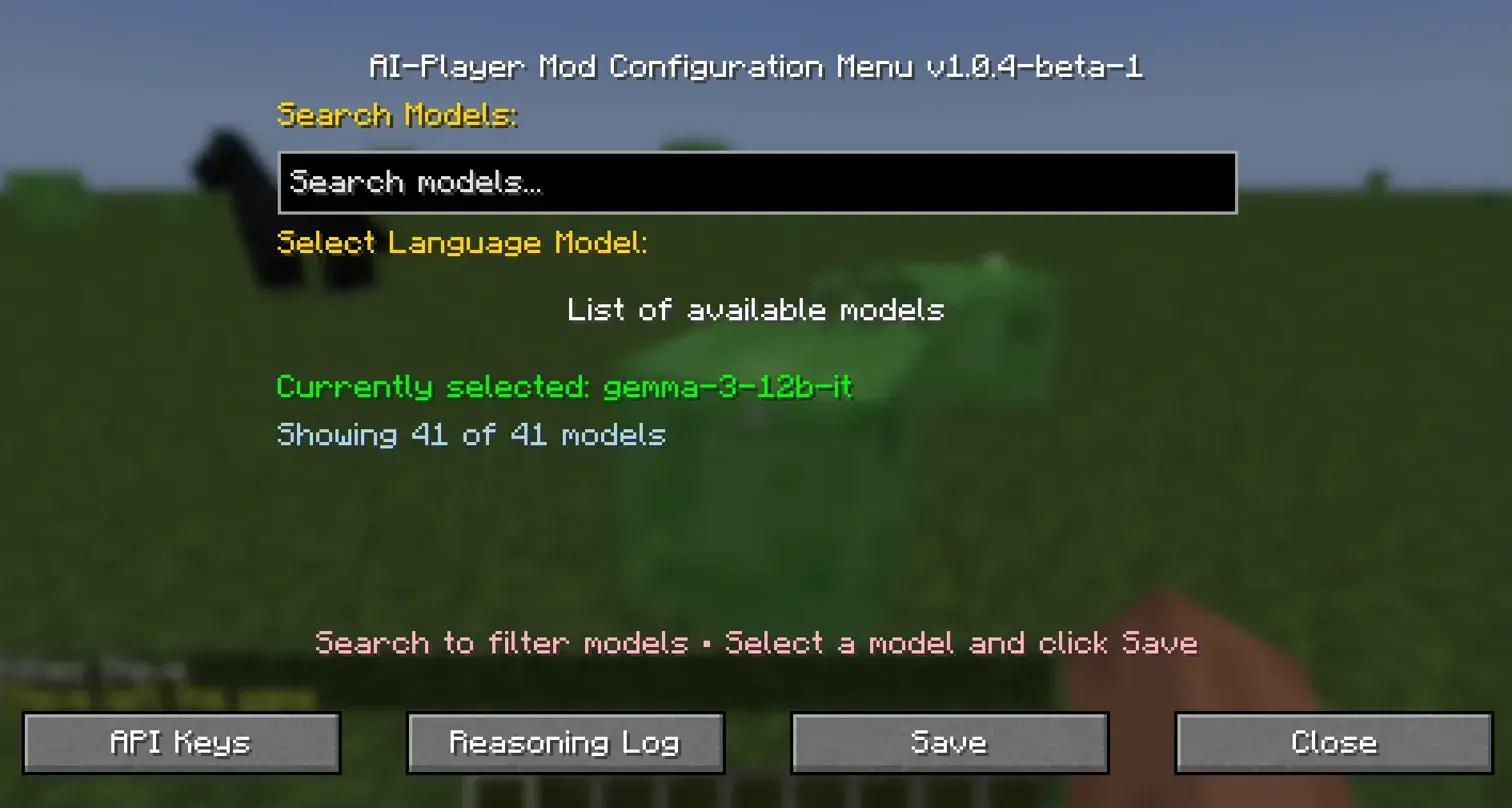

- Revamped the Config Manager UI with a responsive layout, along with a search option for providers with a lot of models (like Gemini).

AI Player v1.0.5.1 — Release Notes

Overview

AI Player v1.0.5.1 is now live! 🎉

I realized that many of the commitments made in the previous announcement were too ambitious to implement in a single update. To keep development smooth, this release is the first part of a two-part update.

This update focuses on core system rewrites and better AI decision-making, laying the foundation for the next wave of features.

🚀 What's New in 1.0.5.1

🧠 Revamped NLP System

- Fully redesigned Natural Language Processing (NLP) — no more "I couldn’t understand you."

- This is a new and experimental system I’ve been designing and rigorously testing over the last month.

- Results are promising, but not yet up to my personal standards — expect further refinements in future updates.

📚 Rewritten RAG & Database System (with Web Search)

- New Retrieval-Augmented Generation (RAG) system integrated with a database and web search.

- The AI now provides accurate factual information about Minecraft, drastically reducing hallucinations.

- Supported search providers:

- Gemini API

- Serper API

- Brave Search API (in development, will push this to the next patch instead)

🧩 Meta-Decision Layer

- Added a task chaining system:

- You give a high-level instruction → the bot automatically breaks it into smaller tasks → executes step by step.

Current Supported Tasks:

- Go to a location

- Go to a location and mine resources

- Detect nearby blocks & entities

- Report stats (health, oxygen, hunger, etc.)
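As a toy illustration of the task chaining idea, here is a short Python sketch. It is not how the mod does it: the real meta-decision layer decides the breakdown itself, while this example hard-codes a tiny planner. The handler names, the `plan` function and the "go to X and mine Y" phrasing are all assumptions made up for the example.

```python
from collections import deque
from typing import Callable, Dict, List, Tuple


# Hypothetical task handlers; each returns a short status string.
def go_to(target: str) -> str:
    return f"arrived at {target}"


def mine(target: str) -> str:
    return f"mined {target}"


HANDLERS: Dict[str, Callable[[str], str]] = {"go_to": go_to, "mine": mine}


def plan(instruction: str) -> List[Tuple[str, str]]:
    """Toy planner: split 'go to X and mine Y' into ordered (task, argument) steps."""
    steps: List[Tuple[str, str]] = []
    for part in instruction.lower().split(" and "):
        part = part.strip()
        if part.startswith("go to "):
            steps.append(("go_to", part[len("go to "):]))
        elif part.startswith("mine "):
            steps.append(("mine", part[len("mine "):]))
    return steps


def run_chain(instruction: str) -> List[str]:
    """Execute the planned steps one by one, in order, and collect their results."""
    queue = deque(plan(instruction))
    log: List[str] = []
    while queue:
        task, argument = queue.popleft()
        log.append(HANDLERS[task](argument))
    return log
```

Calling `run_chain("Go to the cave and mine iron ore")` runs the "go to" step first and the "mine" step second, which is the whole point of the chain: later steps only start once earlier ones are done.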

🔌 API Integrations

AI Player now supports multiple LLM providers (configurable via API keys):

- OpenAI

- Anthropic Claude

- Google Gemini

- xAI Grok

Web Search Tool

If you select Gemini Search as the web search tool for the LLM, it will automatically use the API key you have set for your LLM provider in the settings.json5 file.

For https://serper.dev/ search, get an API key from serper.dev, then navigate to the game's config folder, open ai_search_config.json and put the key there.

(Note: I couldn’t test all of these myself except the Gemini API since API keys are costly, but the integrations are ready.)

🛠️ Development Notes

- While this update may look small on the surface, designing the systems, writing the code, and debugging took a huge amount of time.

- On top of this, I’ve picked up more freelance contracts and need to focus on my final-year project.

- Updates will continue — just at a slower pace.

📌 Coming Soon in Part 2

Here’s what’s planned for the next patch:

- ⚔️ Combat & Survival Enhancements

  - Bot uses weapons (including ranged) to fend off mobs.

  - Reflex module upgrades.

  - More natural world interactions (e.g., sleeping at night).

- 🌊 Improved Path Tracer

  - Smarter navigation through water and complex terrain.

- 🎯 Self-Goal Assignment System

  - Bot assigns itself goals like a real player.

  - Will initiate conversations with players and move autonomously.

- 😊 Mood System (design phase)

  - Adds emotional context and varied behavior.

- 👥 Player2 Integration

  - Highly requested. This will be the first major feature of the second update.

A bug fix for the 1.20.4 release port

Changelog v1.0.4-release+1.20.4-bugfix

- Fixed mod dependency metadata

A small bug fix for the 1.20.6 port

Changelog v1.0.4-release+1.20.6-bugfix

- Fixed mod dependency metadata

The 1.20.1 port for the mod.

Changelog v1.0.4-release+1.20.1

- Removed some minor functionality regarding listing the bot on the player menu since 1.20.1 carpet doesn't have the code for it.

- Minor code changes for 1.20.1

- Fixed mod dependency metadata

The 1.20.4 release port for version 1.0.4

Changelog v1.0.4-release+1.20.4

- Removed redundant code and unused imports.

- Changed status to release from beta.

Changelog v1.0.4-release+1.20.6

- Updated codebase for 1.20.6 compatibility.

- Optimized the codebase by removing redundant code and unused imports.

Update 1.0.4-beta-1!

Instead of pushing this update as an alpha, I think the mod has reached the point where I can confidently call it a beta.

Changelog

- Fixed server sided compatibility support for play mode!

- Fixed a client-side bug where the `/configMan` command worked on the server but not on the client.

This marks the last version update for Minecraft 1.20.4, as I will be moving up to 1.20.6 from now on.

There will be another release of this version for 1.20.6, shortly.

Changelog

Introduced server-sided compatibility for training mode.

Play mode for some reason fails to connect to the ollama server, so I am still working it out; this will be fixed in the next hotfix.

Fixed

- The removeArmor command now works. The decision-making logic will be updated accordingly in the next hotfix.

Changelog v1.0.3-alpha-2

- Updated the qtable storage format (the previous qtable is not compatible with this version).

- Created a "risk taking" mechanism which greatly reduces training time by making the actions taken during training context-based instead of random.

- Added more environment triggers for the bot to look up its training data. It now also reacts to dangerous environments around it, typically lava, places where it might fall off, or sculk blocks.

- Created very detailed reward mechanisms for the bot during training for the best possible efficiency.

- Fixed the previous block-scanning code, which now uses a DLS algorithm for better optimization.

Upcoming changes.

- Introduce goal-based reinforcement learning (you give the bot a goal and it will try to learn how best to achieve it).

- Switch to Deep Q-learning instead of traditional Q-learning (TLDR: use a neural network instead of a table).

- Create custom movement code for the bot for precise movement instead of carpet's server-sided movement code.

- Give the bot a more detailed sense of its surroundings (like how we can see blocks around us in game) so that it can make more precise decisions. Right now it knows what blocks are around it, but it doesn't know how those blocks are placed around it, or in what order/shape. I intend to fix that.

- Implement human consciousness level reasoning??? (to some degree maybe) (BIG MAYBE)

Some video footage :

mob related reflex actions

https://github.com/user-attachments/assets/1700e1ff-234a-456f-ab37-6dac754b3a94

environment reaction

https://github.com/user-attachments/assets/786527d3-d400-4acd-94f0-3ad433557239

What to do to set up this version before playing the game (assuming you are a returning user):

1. Make sure you still have ollama installed.

2. In cmd or terminal type `ollama pull nomic-embed-text` (if not already done).

3. Type `ollama pull llama3.2`

4. Type `ollama rm gemma2` (if you still have it installed)

5. Type `ollama rm llama2` (if you still have it installed)

6. If you have run the mod before, go to your .minecraft folder, navigate to a folder called config, and delete a file called settings.json5

Then make sure you have turned on the ollama server. After that, launch the game.

Type /configMan in chat and select llama3.2 as the language model, then hit save and exit.

Then type /bot spawn <yourBotName> <mode>, where <mode> is training (for training mode; this mode won't connect to the language model) or play (for normal usage).

YouTube tutorial video (coming soon)

So after a lot of research and painful hours of coding here's the new update!

What's new :

- Fixed previous bugs.

- Switched to a much lighter model (llama3.2) for conversations, RAG and function calling.

- A whole lot of commands.

- Reinforcement Learning (Q-learning).

- Theoretically multiplayer compatible (just install the dependencies on the server side), as carpet mod can run on servers too, but I have not tested it yet. Feedback is welcome from testers on this.

- Theoretically the mod should not require everyone to install it in multiplayer; it should work as a server-sided mod. I haven't tested this yet either, so feedback is welcome from testers.

The bot can now interact with its environment based on "triggers", learn about its situation and try to adapt.

The learning process is not short. Don't expect the bot to learn how to deal with a situation very quickly; in fact, if you want intelligent results, you may need hours of training (something I will focus on once I fix some more bugs, add some more triggers and get this version out of the alpha stage).

To start the learning process:

/bot spawn <botName> training

Right now the bot only reacts to hostile mobs around it. Upcoming updates will add more "triggers" so that the bot responds to more scenarios and learns how to deal with them.

A video on how the bot learns and what's new in this patch

# New commands :

Spawn command changed.

/bot spawn <bot> <mode: training or play>, if you type anything else in the mode parameter you will get a message in chat showing the correct usage of this command

/bot use-key <W,S, A, D, LSHIFT, SPRINT, UNSNEAK, UNSPRINT> <bot>

/bot release-all-keys <bot> <botName>

/bot look <north, south, east, west>

/bot detectDangerZone // Detects lava pools and cliffs nearby

/bot getHotBarItems // returns a list of the items in its hotbar

/bot getSelectedItem // gets the currently selected item

/bot getHungerLevel // gets its hunger level

/bot getOxygenLevel // gets the oxygen level of the bot

/bot equipArmor // gets the bot to put on the best armor in its inventory

/bot removeArmor // work in progress.

Current bugs in this version :

- If the bot dies or is killed off while it was engaged in an action, the code might throw an error on the bot's respawn. The temporary fix is a game restart (or server restart). This will be fixed in an upcoming patch.

- The `removeArmor` sub-command doesn't work (yet).

What to do to set up this version before playing the game:

1. Make sure you still have ollama installed.

2. In cmd or terminal type `ollama pull nomic-embed-text` (if not already done).

3. Type `ollama pull llama3.2`

4. Type `ollama rm gemma2` (if you still have it installed)

5. Type `ollama rm llama2` (if you still have it installed)

6. If you have run the mod before, go to your .minecraft folder, navigate to a folder called config, and delete a file called settings.json5

Then make sure you have turned on the ollama server. After that, launch the game.

Type /configMan in chat and select llama3.2 as the language model, then hit save and exit.

Then type /bot spawn <yourBotName> <mode>, where <mode> is training (for training mode; this mode won't connect to the language model) or play (for normal usage).

For the nerds : How does the bot learn?

It uses an algorithm called Q-learning which is a part of reinforcement learning.
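For a feel of what that means in code, here is a minimal sketch of tabular Q-learning in Python. It is not the mod's actual implementation: the action names, the state names, and the values of `alpha` (learning rate), `gamma` (discount factor) and `epsilon` (exploration rate) are all assumptions picked for the example. The only part that is standard is the update rule itself: Q(s, a) is nudged towards reward + gamma * (best Q value in the next state).

```python
import random
from collections import defaultdict
from typing import Dict

# Hypothetical actions the bot could pick from when a hostile mob is nearby.
ACTIONS = ["attack", "retreat", "block"]


def make_q_table() -> Dict[str, Dict[str, float]]:
    """Q-table: state -> {action -> estimated value}, every entry starting at 0."""
    return defaultdict(lambda: {action: 0.0 for action in ACTIONS})


def choose_action(q_table, state: str, epsilon: float, rng: random.Random) -> str:
    """Epsilon-greedy: explore with probability epsilon, otherwise take the best known action."""
    if rng.random() < epsilon:
        return rng.choice(ACTIONS)
    values = q_table[state]
    return max(values, key=values.get)


def update(q_table, state: str, action: str, reward: float, next_state: str,
           alpha: float = 0.1, gamma: float = 0.9) -> float:
    """One Q-learning step: move Q(state, action) towards reward + gamma * max Q(next_state)."""
    best_next = max(q_table[next_state].values())
    old_value = q_table[state][action]
    q_table[state][action] = old_value + alpha * (reward + gamma * best_next - old_value)
    return q_table[state][action]
```

Each call to `update` only moves the stored value a small step (controlled by `alpha`), which is one reason training takes so long: the bot has to run into the same kind of situation many times before the table settles.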

A very good video explanation on what Q-learning is :

Changelog description

This is the final hotfix for version 1.0.2. It addresses a lot of broken code and changes the mod's workflow at the core.

In this hotfix, once again, a lot of the code has changed.

I will keep it simple in the changelog this time.

Fixed

- GUI issue in the config manager which didn't show the currently selected language model [FIXED].

- The bot was not responding to normal conversations [FIXED].

Accuracy is improved even further with the gemma2 model.

For this patch, users will need to:

1. Go to your game folder (.minecraft)/config and you will find a settings.json5 file. Delete that.

2. (If you have already run the previous 1.0.2 version) go back to your .minecraft folder. You will find a folder called "sqlite_databases". Inside that is a file called memory_agent.db

3. Delete that as well.

4. Install the gemma2 model (ollama pull gemma2) [Required]

Users can choose to keep the llama2 or mistral models and test each other's efficiency.

However, the mod has a 100% chance of breaking when llama2 or mistral is used because of the responses they generate.

From a technical perspective

The mod breaks because llama2 and mistral generate very large initial responses whose vector embeddings cannot be generated, so the mod throws an exception.

Gemma2, however, keeps it precise and to the point, resulting in much shorter responses.

Compared to llama2 or mistral, Gemma2 also has much more accurate information about Minecraft data such as crafting recipes, biomes and mobs, presumably up to 1.20.5, as the test results indicate.

A small update to the version 1.0.2

Thanks to Mr. Álvaro Carvalho, who suggested a few enhancements to the mod regarding the commands system and some inner code changes.

Also this version's icon is fixed and will be recognized by modmanager.

Once again the setup instructions for existing users

1. Go to your game folder (.minecraft)/config and you will find a settings.json5 file. Delete that.

2. (If you have already run the previous 1.0.2 version) go back to your .minecraft folder. You will find a folder called "sqlite_databases". Inside that is a file called memory_agent.db

3. Delete that as well.

4. Install the models mistral, llama2 and nomic-embed-text.

5. Then run the game.

6. Inside the game run /configMan to set the language model to llama2.

Then spawn the bot and start talking!

Patch 1.0.3 will include backwards compatibility for versions 1.20, 1.20.1 and 1.20.3, and other features as mentioned earlier!

For the general player base.

This patch fixes a major issue where the llama2 model badly misclassified certain types of user prompts. That part is now handled by mistral instead.

Aaaand after 2 weeks of a lot of coffee, back pain and brain ache, here's the new update.

At first it may seem that this version doesn't do much, but keep talking with the bot and you will find out :)

To-do: Install three models in ollama. [VERY IMPORTANT]

- nomic-embed-text (ollama pull nomic-embed-text)

- llama2 (ollama pull llama2)

- mistral (ollama pull mistral)

And go to your game folder (.minecraft/config) and delete the old settings.json5 file.

In game, change your selected language model to llama2 for the best performance.

TLDR: The bot is way more intelligent now, can remember past conversations, can store current conversations and then use the data for long term storage, has gotten better at function calling like movement and block detection, no fixed responses, no need of fixed prompt checking, it all happens dynamically, hehe.

Also the connection to the language model is only made when the bot is spawned in game, thus saving a lot of memory when the bot is not in the game.

Eventually I intend to bring support for custom models on the ollama database.

Next patch will include more minecraft interaction, such as mining, improved movement, collision/obstacle detection, etc.

Accuracy for detecting correctly what the player wants to say: somewhere between 95-99%

For the nerds.

So, for the tech savvy people, I have implemented the following features.

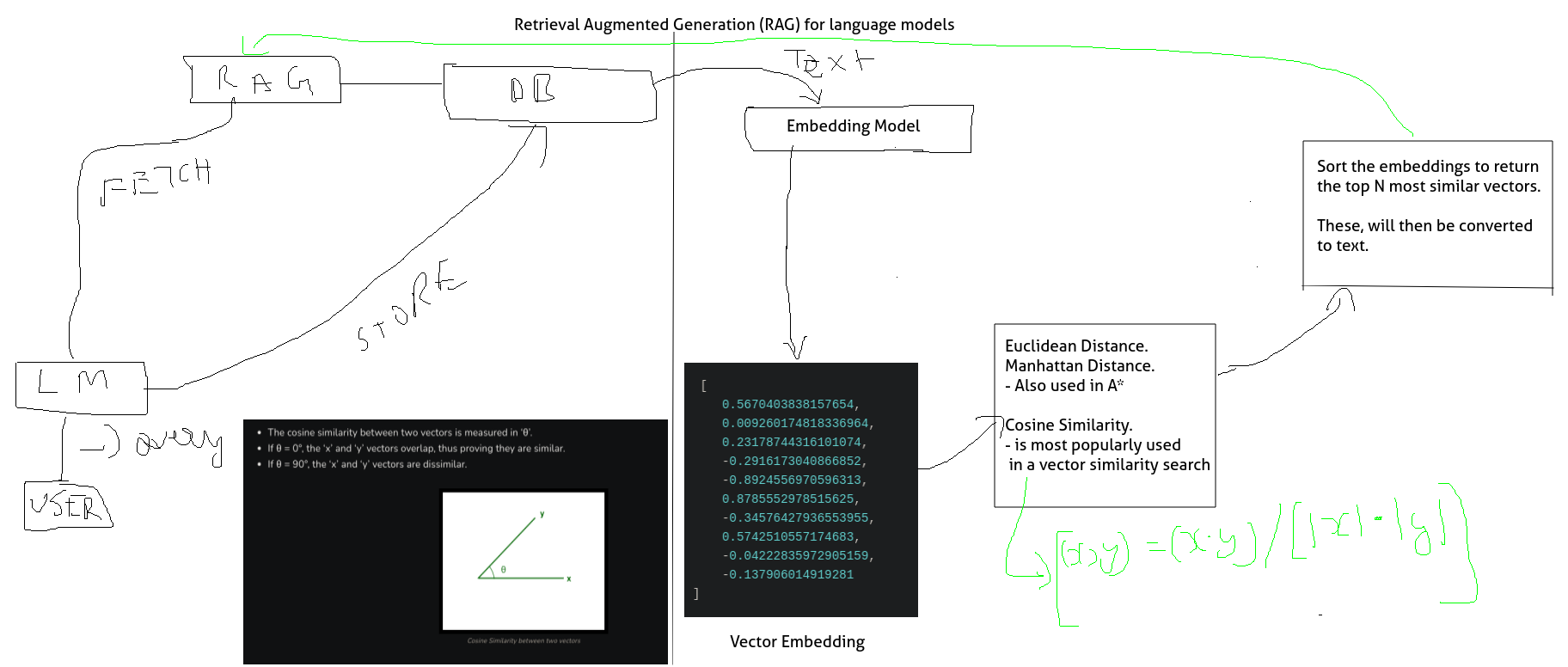

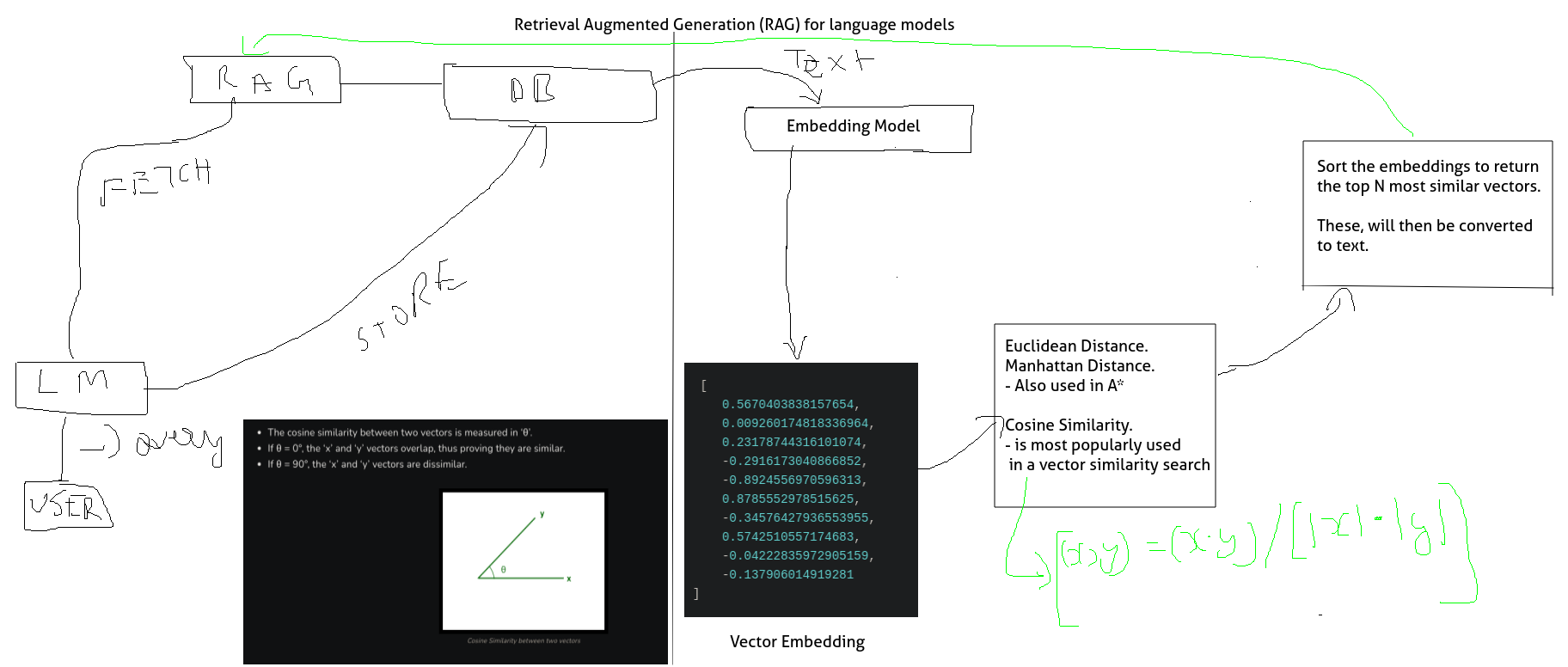

LONG TERM MEMORY: This mod now features concepts used in the field of AI like Natural Language Processing (much better now) and something called Retrieval Augmented Generation (RAG).

How does it work?

Well:

We convert the user input to a vector embedding, which is a list of numbers.

Then physics 101!

In physics, a vector is often a set of 3 coordinates in XYZ space (an embedding is the same idea with many more coordinates). It has two parts, a direction and a magnitude.

If you have two vectors, you can check their similarity by checking the angle between them.

The smaller the angle between the vectors, the more similar they are!

Now if you have two sentences, converted to vectors, you can find out whether they are similar to each other using this process.

In this particular instance I have used a method called cosine similarity,

where you find the similarity using the formula

similarity(x, y) = (x . y) / (|x| |y|)

where x . y is the dot product and |x| and |y| are the magnitudes of the vectors.
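The same formula as a small Python sketch (the function name and the zero-vector handling are choices made for this example, not taken from the mod's code):

```python
import math
from typing import Sequence


def cosine_similarity(x: Sequence[float], y: Sequence[float]) -> float:
    """Return (x . y) / (|x| |y|), a value between -1 and 1."""
    if len(x) != len(y):
        raise ValueError("vectors must have the same number of dimensions")
    dot = sum(a * b for a, b in zip(x, y))
    norm_x = math.sqrt(sum(a * a for a in x))
    norm_y = math.sqrt(sum(b * b for b in y))
    if norm_x == 0 or norm_y == 0:
        # The angle is undefined for a zero vector; this sketch treats it as "not similar".
        return 0.0
    return dot / (norm_x * norm_y)
```

Vectors pointing the same way score close to 1, vectors at right angles score 0, and opposite vectors score -1. Only the direction matters, so a vector and a scaled copy of it are still considered identical.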

So we use this technique on a bunch of stored conversation and event data fetched from an SQL database: we generate their vector embeddings and compare them against the embedding of the user's prompt. We then sort the matches further on the basis of, let's say, timestamps, and we get the most relevant conversation for what the player said.
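A minimal sketch of that retrieval step in Python, under some assumptions: the `Memory` class and its fields are hypothetical, the embeddings are passed in ready-made instead of being generated by an embedding model, and the database is replaced by a plain list. Ranking is by cosine similarity first, with the newer timestamp winning ties.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class Memory:
    """Hypothetical stored row: the text, its embedding, and when it was saved."""
    text: str
    embedding: List[float]
    timestamp: int


def _cosine(x: Sequence[float], y: Sequence[float]) -> float:
    dot = sum(a * b for a, b in zip(x, y))
    norms = math.sqrt(sum(a * a for a in x)) * math.sqrt(sum(b * b for b in y))
    return dot / norms if norms else 0.0


def most_relevant(prompt_embedding: Sequence[float], memories: List[Memory],
                  top_k: int = 2) -> List[Memory]:
    """Rank memories by similarity to the prompt; newer entries win ties."""
    ranked = sorted(
        memories,
        key=lambda m: (_cosine(prompt_embedding, m.embedding), m.timestamp),
        reverse=True,
    )
    return ranked[:top_k]
```

The top results would then be handed to the language model as extra context alongside the player's message.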

Pair this with function calling, which uses Natural Language Processing to understand what the player wants the bot to do and then calls a pre-coded method, for example movement or a block check, to get the bot to do the task.

Save this data, i.e. what the bot did just now, to the database and you get even more improved memory!

To top it all off, Llama 2 is the best performing model for this mod right now, so I will suggest y'all to use llama2.

In fact some of the methods won't even run without llama2 like the RAG for example so it's a must.

For the general player base.

Aaaand after 2 weeks of a lot of coffee, back pain and brain ache, here's the new update.

At first it may seem that this version doesn't do much, but keep talking with the bot and you will find out :)

To-do: Install two models in ollama. [VERY IMPORTANT]

- nomic-embed-text (ollama pull nomic-embed-text)

- llama2 (ollama pull llama2)

In game, change your selected language model to llama2 for the best performance.

TLDR: The bot is way more intelligent now, can remember past conversations, can store current conversations and then use the data for long term storage, has gotten better at function calling like movement and block detection, no fixed responses, no need of fixed prompt checking, it all happens dynamically, hehe.

Also the connection to the language model is only made when the bot is spawned in game, thus saving a lot of memory when the bot is not in the game.

Eventually I intend to bring support for custom models on the ollama database.

Next patch will include more minecraft interaction, such as mining, improved movement, collision/obstacle detection, etc.

For the nerds.

So, for the tech savvy people, I have implemented the following features.

LONG TERM MEMORY: This mod now features concepts used in the field of AI like Natural Language Processing (much better now) and something called Retrieval Augmented Generation (RAG).

How does it work?

Well:

We convert the user input to a vector embedding, which is a list of numbers.

Then physics 101!

In physics, a vector is often a set of 3 coordinates in XYZ space (an embedding is the same idea with many more coordinates). It has two parts, a direction and a magnitude.

If you have two vectors, you can check their similarity by checking the angle between them.

The smaller the angle between the vectors, the more similar they are!

Now if you have two sentences, converted to vectors, you can find out whether they are similar to each other using this process.

In this particular instance I have used a method called cosine similarity,

where you find the similarity using the formula

similarity(x, y) = (x . y) / (|x| |y|)

where x . y is the dot product and |x| and |y| are the magnitudes of the vectors.

So we use this technique on a bunch of stored conversation and event data fetched from an SQL database: we generate their vector embeddings and compare them against the embedding of the user's prompt. We then sort the matches further on the basis of, let's say, timestamps, and we get the most relevant conversation for what the player said.

Pair this with function calling, which uses Natural Language Processing to understand what the player wants the bot to do and then calls a pre-coded method, for example movement or a block check, to get the bot to do the task.
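Here is a toy Python sketch of the function calling idea. It is only an illustration: in the mod the intent is worked out by the NLP system and the language model, while this example fakes it with keyword matching. The intent names and the two "pre-coded methods" are made up for the example.

```python
import re
from typing import Callable, Dict


# Hypothetical pre-coded methods the bot could run.
def move_forward() -> str:
    return "bot moved forward"


def check_block_in_front() -> str:
    return "bot checked the block in front"


FUNCTIONS: Dict[str, Callable[[], str]] = {
    "movement": move_forward,
    "block_check": check_block_in_front,
}


def classify_intent(message: str) -> str:
    """Stand-in for the NLP step: guess the intent from whole words in the message."""
    words = set(re.findall(r"[a-z]+", message.lower()))
    if "block" in words:
        return "block_check"
    if words & {"move", "walk", "go"}:
        return "movement"
    return "conversation"


def handle(message: str) -> str:
    """Call the matching pre-coded method, or fall back to normal conversation."""
    action = FUNCTIONS.get(classify_intent(message))
    if action is None:
        return "no function called; reply with normal conversation"
    return action()
```

The useful part of this design is the lookup table: adding a new ability means writing one more method and registering it under an intent name, without touching the message handling.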

Save this data, i.e. what the bot did just now, to the database and you get even more improved memory!

To top it all off, Llama 2 is the best performing model for this mod right now, so I will suggest y'all to use llama2.

In fact some of the methods won't even run without llama2 like the RAG for example so it's a must.

This alpha version of 1.0.1 includes a lot of content. Well, at least in terms of code it does.

Steve (or the bot) can now understand what users are saying a lot better by using a process called "Natural Language Processing"!

This Natural language processing has been implemented for the sake of getting the in-game bot to execute actions based on what the user says, it is separate from the language model. However I intend to achieve a sort of "synchronisation" which will keep the language model informed of what is going on in-game.

Also I have added block and nearby entity detection.

The bot can now detect if there is a block in front of it, simply ask it using the sendAMessage command!

There's a 99% chance that the bot will understand the intention and context of your message. I hope to achieve 100% soon.

Initially when you spawn the bot, it takes a minute or so for it to face you correctly, since its spawn position will be fixed by the game by teleporting it somewhere near you after spawning is done (this is not the same as what you see in game when you spawn the bot and it instantly teleports to you).

After that the bot will accurately face the nearest entity in front of it. The range is 5 blocks on the X, Y and Z axes. To make it more realistic, I have made it so that the bot can't detect entities behind it.

Check github for the sample footage of this version.

Click on the view source button, keep scrolling till you find the XZ pathfinding video.

This version of the mod includes a pathfinding algorithm on the XZ axis for the bot.

It is recommended to test the pathfinding on a superflat world with no mobs spawning.

Try to keep the destination coordinates at or under 50 on the X and Z axes. Anything more than that will take some time to calculate and the game might appear frozen, hence the alpha tag given to this version.

Also, the commands system has been fully revamped.

I uploaded this alpha version just to prove that I did not give up on this project lol.

Expect periodic releases.

Main command

/bot

Sub commands:

spawn <botName> This command is used to spawn a bot with the desired name. ==For testing purposes, please keep the bot name to Steve==.

walk <botName> <till> This command will make the bot walk forward for a specific amount of seconds.

/goTo <botName> <x> <y> <z> This command is supposed to make the bot go to the specified co-ordinates, by finding the shortest path to it. It is still a work in progress as of the moment.

/sendAMessage <botName> <message> This command will help you to talk to the bot.

/teleportForward <botName> This command will teleport the bot forward by 1 positive block

/testChatMessage <botName> A test command to make sure that the bot can send messages.

Example Usage:

/bot spawn Steve

Added custom code for spawning bots for players running in offline mode.

And a lot more bug-fixes.